Introduction

In this series, we are going to dive deep into the low-level system to understand what input/output (I/O) actually is. I am going to write some code to demonstrate it to you and try to be as practical as possible.

From my point of view, read and write are just the operations to transfer data from one buffer to another. If you understand the fundamental stuff, then it would be very easy for you to tackle any problems in complex systems that you might have in the future.

Once you finish this series, you will understand how the Nginx proxy, Redis, goroutines, the Node.js event loop, and many other modern technologies build on these I/O models.

What is an I/O operation?

I shamelessly copied the definition from Wikipedia

In computing, input/output (I/O, i/o, or informally io or IO) is the communication between an information processing system, such as a computer, and the outside world, such as another computer system, peripherals, or a human operator. Inputs are the signals or data received by the system and outputs are the signals or data sent from it. The term can also be used as part of an action; to “perform I/O” is to perform an input or output operation.

Put simply, an operating system (OS) such as Linux manages the system resources that processes use to perform I/O operations.

Below are some system resources used to perform I/O operations:

- Files

- Pipes

- Network sockets

- Devices

What is a file descriptor

I/O resources are identified by file descriptors in Linux

Many I/O resources, such as files, sockets, pipes, and devices, are identified by a unique ID called a file descriptor (FD). In Unix-like operating systems, each process has its own set of file descriptors, and each process normally starts with 3 standard POSIX file descriptors. The OS uses the process ID (PID) to identify a process when it allocates CPU time, memory, file descriptors, and permissions to it.

| Integer value | Name | File stream | Located at |
|---|---|---|---|
| 0 | Standard input | stdin | /proc/PID/fd/0 |
| 1 | Standard output | stdout | /proc/PID/fd/1 |
| 2 | Standard error | stderr | /proc/PID/fd/2 |

File descriptor usage

Let’s consider the system call called read() (https://man7.org/linux/man-pages/man2/read.2.html)

#include <unistd.h>

ssize_t read(int fd, void buf[.count], size_t count);

The first argument of the read() system call is a file descriptor. This file descriptor could belong to a file, pipe, socket, or device. Once the kernel has determined which type of resource to read from, it begins filling the buffer that the buf argument points to, up to count bytes.

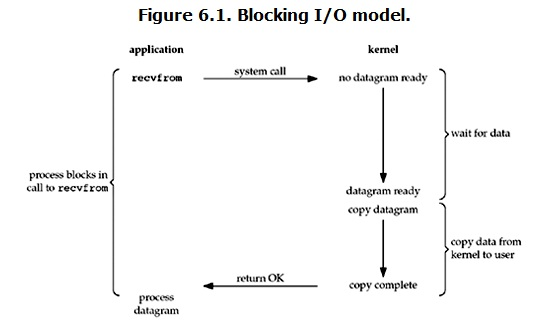

Model 1: Blocking I/O model

A process that uses the blocking model is blocked while it performs an I/O operation. It first waits for data to become available in the I/O resource, and then the kernel copies that data from kernel space to user space (application space). During both steps the calling thread cannot run any other task.

Blocking I/O model drawbacks

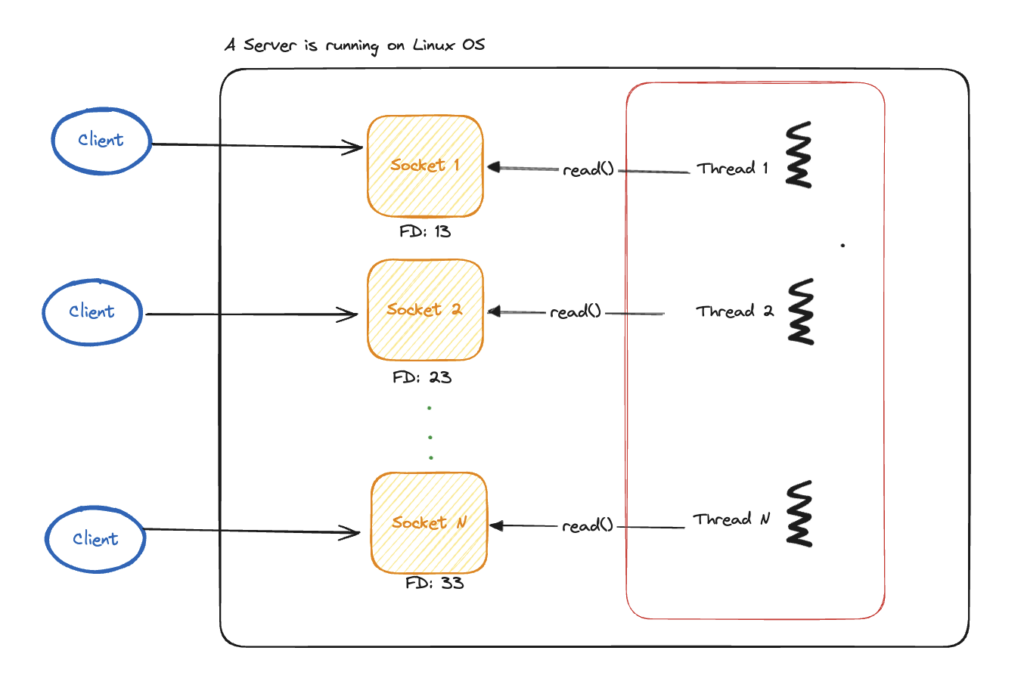

Imagine we have 1,000 sockets to read from. With this model we have to spawn 1,000 threads, one per socket, which wastes resources because a socket may be empty and leave its thread with nothing to read. That thread stays blocked until data arrives in the socket. If half of the sockets are idle, we are keeping 500 threads in the SLEEP state, each holding a connection and doing nothing. The OS has to save and restore the state of all these threads when it context-switches between them, and that overhead can become expensive enough to degrade the whole system.

Show me the code

Here is an example in which a read() system call blocks the calling thread, so the second thread cannot even be created until read() returns. Full code implementation is in here

Main.c

#include <stdio.h>

#include "blockingio.h"

int main() {

readBlockingIO();

return 0;

}

blockingio.h

#ifndef BLOCKINGIO_H

#define BLOCKINGIO_H

int readBlockingIO();

#endif

blockingio.c

#include "blockingio.h"

#include <unistd.h>

#include <fcntl.h>

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

// Function that performs non-blocking work

void* thread_callback(void* arg) {

for (int i = 0; i < 5; i++) {

printf("Other thread is working...\n");

sleep(1); // Simulate work with a 1-second delay

}

return NULL;

}

int readBlockingIO() {

int fd;

char buffer[100];

pthread_t other_thread;

ssize_t bytesRead;

// Open a file in read-only mode

fd = open("example.txt", O_RDONLY);

if (fd == -1) {

perror("Failed to open file");

exit(1);

}

// This read() function blocks the current thread until it is done

bytesRead = read(fd, buffer, sizeof(buffer) - 1);

if (bytesRead == -1) {

perror("Failed to read file");

close(fd);

exit(1);

}

// Create a new thread after read() operation is completed

if (pthread_create(&other_thread, NULL, thread_callback, NULL) != 0) {

perror("Failed to create thread");

return 1;

}

// Null-terminate the buffer to treat it as a string

buffer[bytesRead] = '\0';

// Print the contents of the buffer (%zd is the format specifier for ssize_t)

printf("Read %zd bytes: %s\n", bytesRead, buffer);

// Close the file descriptor

close(fd);

// Wait for the other thread to finish

if (pthread_join(other_thread, NULL) != 0) {

perror("Failed to join thread");

return 1;

}

return 0;

}

Output

gcc -o program main.c blockingio.c -pthread && ./program

[1] 15045

[1] + 15045 done gcc -o program main.c blockingio.c -pthread

Read 38 bytes: hello from the other side of the world

Other thread is working...

Other thread is working...

Other thread is working...

Other thread is working...

Other thread is working...

Model 2: Non-blocking I/O model

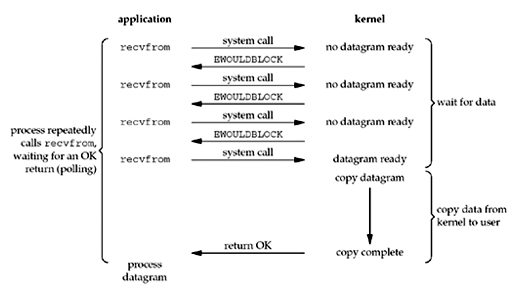

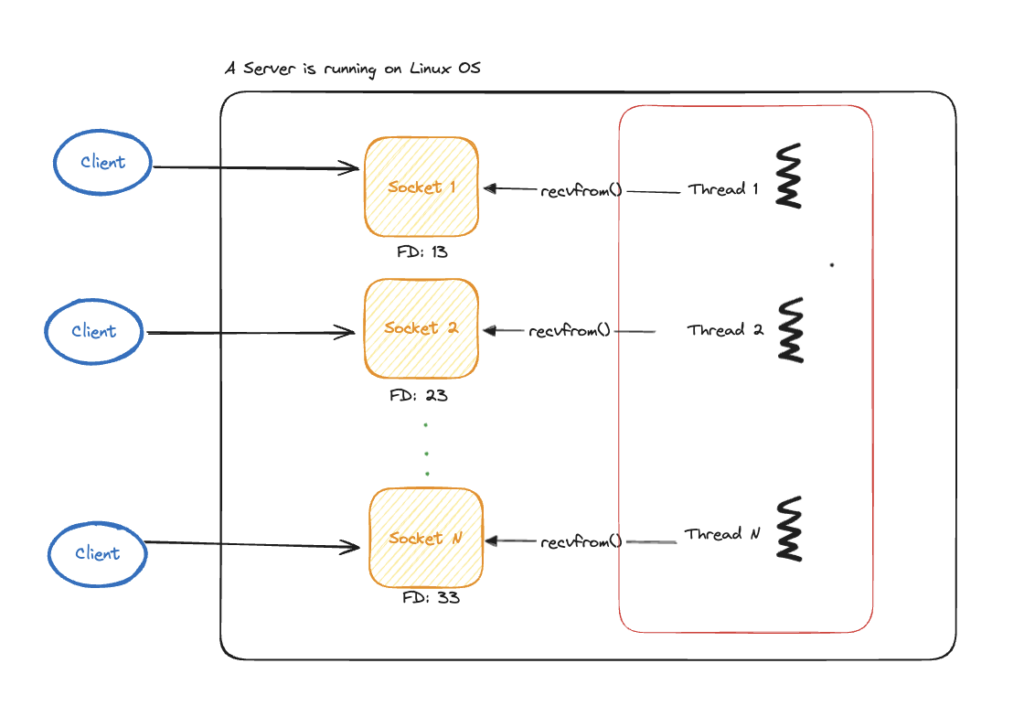

In the blocking I/O model, putting a thread to sleep while it waits for data to become available in a socket is inefficient, especially when many threads do the same. The non-blocking I/O model avoids putting the thread to sleep.

A thread repeatedly asks each socket whether data is available to read (polling). If no data is available yet, the kernel does not put the application thread to sleep; instead it returns the error EWOULDBLOCK (or EAGAIN). The error tells the application thread that there is nothing to read yet, so it keeps issuing requests to the kernel until data arrives. Once data is ready, the kernel copies it from kernel space to user space for the application to handle.

Drawbacks

However, in this non-blocking I/O model the thread acts as an event loop that has to keep querying every socket itself. This polling often wastes CPU cycles on sockets that have nothing to deliver. And to be honest with you, I don’t see much improvement over the blocking I/O model, because the main thread is still blocked while the data is copied from kernel space to application space.

Show me the code

To use this model, you need to set the O_NONBLOCK flag on every socket with the fcntl(2) system call.

The full source code of the implementation of this model is in here

Server.c

Set non-blocking for a socket

// Set non-blocking mode for the socket

int set_non_blocking(int sockfd) {

int flags = fcntl(sockfd, F_GETFL, 0);

if (flags == -1) {

perror("fcntl(F_GETFL)");

return -1;

}

flags |= O_NONBLOCK;

if (fcntl(sockfd, F_SETFL, flags) == -1) {

perror("fcntl(F_SETFL)");

return -1;

}

return 0;

}

Implementing a UDP server that receives a datagram from the client

// Set the socket to non-blocking mode

if (set_non_blocking(sockfd) == -1) {

close(sockfd);

return 1;

}

// Define server address

server_addr.sin_family = AF_INET;

server_addr.sin_addr.s_addr = INADDR_ANY;

server_addr.sin_port = htons(8081);

// Bind the socket to the specified port and IP address

if (bind(sockfd, (struct sockaddr *)&server_addr, sizeof(server_addr)) == -1) {

perror("bind failed");

close(sockfd);

return 1;

}

printf("Non-blocking UDP server is running on port %d...\n", 8081);

// Event loop to repeatedly receive data from the socket

while (1) {

// Leave one byte free so the terminator below always fits in the buffer

int received_byte = recvfrom(sockfd, applicationBuffer, sizeof(applicationBuffer) - 1, 0, (struct sockaddr *)&client_addr, &client_len);

if (received_byte > 0) {

applicationBuffer[received_byte] = '\0'; // Null-terminate the string

printf("Received message: %s\n", applicationBuffer);

char client_ip[INET_ADDRSTRLEN];

inet_ntop(AF_INET, &client_addr.sin_addr, client_ip, sizeof(client_ip));

printf("Message from client: %s:%d\n", client_ip, ntohs(client_addr.sin_port));

} else if (received_byte == -1) {

if (errno == EAGAIN || errno == EWOULDBLOCK) {

// No data available yet. Come back later...

usleep(500); // Sleep for 500 microseconds (usleep takes microseconds) and retry

} else {

perror("recvfrom failed");

break;

}

}

}

close(sockfd); // Close the socket after breaking out of the loop

Client.c – sending a message to the server

#define SERVER_PORT 8081

#define SERVER_IP "127.0.0.1"

#define BUFFER_SIZE 1024

int main() {

int sockfd;

struct sockaddr_in server_addr;

char *message = "Hello, server!";

char buffer[BUFFER_SIZE];

socklen_t addr_len = sizeof(server_addr);

// Create a UDP socket (SOCK_DGRAM)

sockfd = socket(AF_INET, SOCK_DGRAM, 0);

if (sockfd == -1) {

perror("socket failed");

return 1;

}

// Define the server address to send data to

memset(&server_addr, 0, sizeof(server_addr));

server_addr.sin_family = AF_INET;

server_addr.sin_port = htons(SERVER_PORT);

// Convert IP address to binary form and assign it

if (inet_pton(AF_INET, SERVER_IP, &server_addr.sin_addr) <= 0) {

perror("inet_pton failed");

close(sockfd);

return 1;

}

// Send a message to the server

int bytes_sent = sendto(sockfd, message, strlen(message), 0,

(struct sockaddr *)&server_addr, sizeof(server_addr));

if (bytes_sent == -1) {

perror("sendto failed");

close(sockfd);

return 1;

}

printf("Sent message to server: %s\n", message);

close(sockfd);

return 0;

}

Result

Server

➜ non-blocking-io git:(master) ✗ gcc -o program main.c nonblockingio.c && ./program

[1] 29039

[1] + 29039 done gcc -o program main.c nonblockingio.c

Non-blocking UDP server is running on port 8081...

Received message: Hello, server!

Message from client: 127.0.0.1:56528

Client

➜ client git:(master) ✗ gcc -o program main.c && ./program

[1] 30428

Sent message to server: Hello, server!

Model 3: Multiplexing I/O model

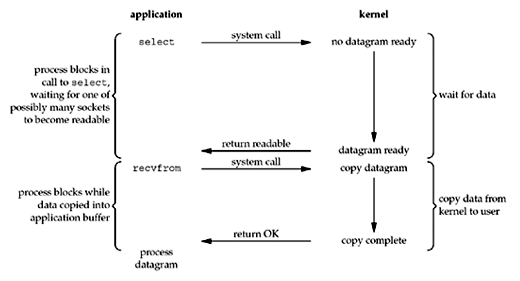

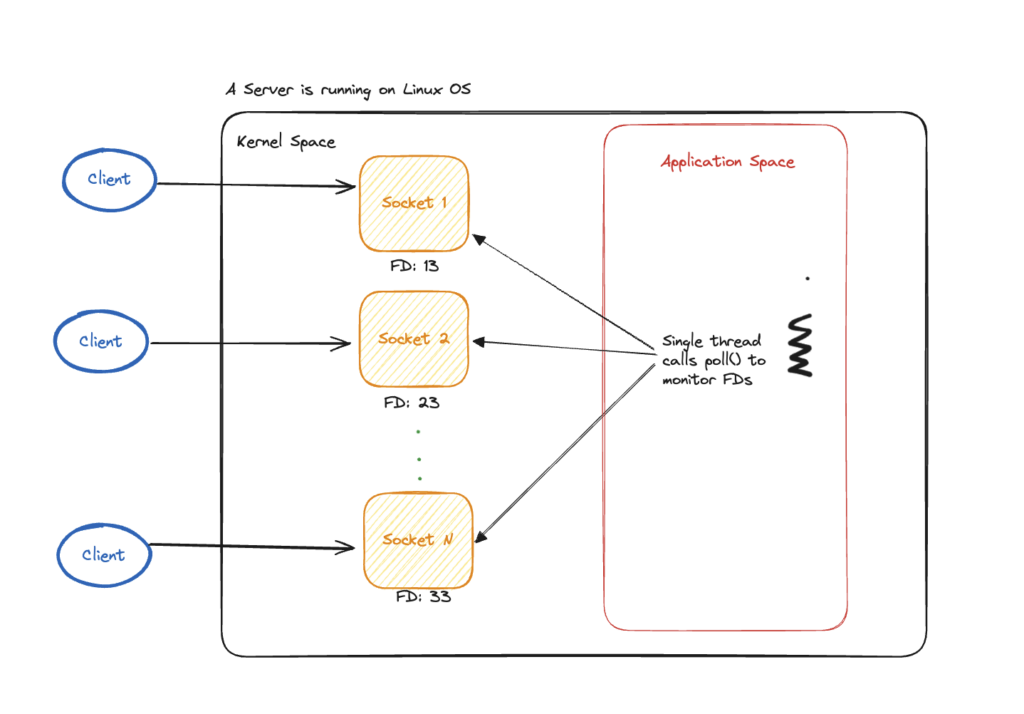

In Linux, to implement a multiplexing I/O model, we begin by using the select or poll system call to check the state of file descriptors (FDs). Next, we invoke the recvfrom system call to retrieve data from the kernel, which is then copied from kernel space to user space.

In contrast to the non-blocking I/O model, which repeatedly checks the state of a single file descriptor, the multiplexing I/O model can monitor many file descriptors (sockets, pipes, and so on) at once using select or poll. Once data is ready to read from a socket, for example, the kernel notifies the application by returning from the select syscall. The application then uses the recvfrom syscall to copy the datagram from the kernel into its own buffer.

I guess this model is called the “multiplexing” I/O model because all requests are multiplexed onto a single thread.

Like the blocking I/O model, it still blocks the process both while waiting for data to become available in the socket and while copying the data out of the socket. Moreover, it takes two syscalls to achieve what the blocking and non-blocking I/O models do with one. The only advantage is the ability to monitor multiple file descriptors at once, which a single thread cannot do efficiently in the blocking or non-blocking I/O model. To be clear, I am referring to a single thread only: with multiple threads, the blocking and non-blocking models can also watch multiple FDs.

Show me the code

You can find the full source code here

if (setsockopt(sockets[i], SOL_SOCKET, SO_REUSEPORT, &optval,

sizeof(optval)) == -1) {

perror("setsockopt(SO_REUSEPORT) failed");

close(sockets[i]);

return 1;

}

Since Linux 3.9, it is possible to bind several sockets to the same port of a server using the SO_REUSEPORT option (you can read more about this option here: SO_REUSEPORT). However, it is the operating system’s responsibility to route requests across the configured sockets, so requests are not guaranteed to be distributed equally among them. For this reason, it is hard for me to demo the capability of multiplexing I/O if I only bind multiple sockets to the same port. Hence, I will create 5 sockets with a port range from 8081 to 8085 for simplicity.

| Object | Objective |
|---|---|
| Client | Sends requests to 5 sockets on ports 8081 to 8085 |
| Server | Listens on these 5 sockets using the Linux poll(2) syscall, copies the data from kernel space to application space, and prints out the message. |

Server.c

int read_multiplexing_IO() {

int MAX_EVENTS = 10;

int BUFFER_SIZE = 1024;

int MAX_SOCKETS = 5;

int sockets[MAX_SOCKETS];

char applicationBuffer[BUFFER_SIZE];

struct sockaddr_in server_addr, client_addr;

socklen_t client_len = sizeof(client_addr);

// Initialize socket array

for (int i = 0; i < MAX_SOCKETS; i++) {

sockets[i] = socket(AF_INET, SOCK_DGRAM, 0);

if (sockets[i] == -1) {

perror("socket");

return 1;

}

// Define server address for each socket

server_addr.sin_family = AF_INET;

server_addr.sin_addr.s_addr = INADDR_ANY;

server_addr.sin_port = htons(8081 + i); // Assign a different port for each socket

// Bind the socket to the specified port and IP address

if (bind(sockets[i], (struct sockaddr *)&server_addr, sizeof(server_addr)) == -1) {

perror("bind failed");

close(sockets[i]);

return 1;

}

printf("Socket %d is running on port %d...\n", i, 8081 + i);

}

// Set up the poll structure

/*

struct pollfd {

int fd; file descriptor

short events; requested events

short revents; returned events

};

*/

// https://man7.org/linux/man-pages/man2/poll.2.html

struct pollfd fds[MAX_SOCKETS];

for (int i = 0; i < MAX_SOCKETS; i++) {

fds[i].fd = sockets[i];

fds[i].events = POLLIN; // POLLIN means data is available to read.

}

while (1) {

printf("Waiting for client");

int poll_count = poll(fds, MAX_SOCKETS, -1); // Wait indefinitely for events

if (poll_count == -1) {

perror("poll failed");

break;

}

for (int i = 0; i < MAX_SOCKETS; i++) {

if (fds[i].revents & POLLIN) {

// Data is ready to be read on socket i

int received_byte = recvfrom(sockets[i], applicationBuffer, sizeof(applicationBuffer) - 1, 0,

(struct sockaddr *)&client_addr, &client_len);

if (received_byte > 0) {

applicationBuffer[received_byte] = '\0'; // Null-terminate the string

printf("Received message on socket %d: %s\n", i, applicationBuffer);

char client_ip[INET_ADDRSTRLEN];

inet_ntop(AF_INET, &client_addr.sin_addr, client_ip, sizeof(client_ip));

printf("Message from client on socket %d: %s:%d\n", i, client_ip, ntohs(client_addr.sin_port));

} else if (received_byte == -1) {

perror("recvfrom failed");

}

}

}

}

return 0;

}

Client.c

int client_socket;

int message_count = 5000; // Number of messages to send

int SERVER_PORT = 8081;

char SERVER_IP[] = "127.0.0.1";

int BUFFER_SIZE = 1024;

int PORT_RANGE = 5;

struct sockaddr_in server_addr;

char buffer[BUFFER_SIZE];

// Create a UDP socket

client_socket = socket(AF_INET, SOCK_DGRAM, 0);

if (client_socket < 0) {

perror("Socket creation failed");

exit(EXIT_FAILURE);

}

// Set up the server address structure

server_addr.sin_family = AF_INET;

if (inet_pton(AF_INET, SERVER_IP, &server_addr.sin_addr) <= 0) {

perror("Invalid server IP address");

close(client_socket);

exit(EXIT_FAILURE);

}

// Send multiple messages to the server

for (int i = 0; i < message_count; i++) {

int random_port = SERVER_PORT + (rand() % PORT_RANGE); // Random port between 8081 and 8085

server_addr.sin_port = htons(random_port);

snprintf(buffer, BUFFER_SIZE, "Message %d from client", i + 1);

ssize_t sent_bytes = sendto(client_socket, buffer, strlen(buffer), 0,

(struct sockaddr *)&server_addr, sizeof(server_addr));

if (sent_bytes < 0) {

perror("sendto failed");

} else {

printf("Sent: %s\n", buffer);

}

}

Result from server

Received message on socket 4: Message 4998 from client

Message from client on socket 4: 127.0.0.1:63620

Waiting for clientReceived message on socket 0: Message 4948 from client

Message from client on socket 0: 127.0.0.1:63620

Received message on socket 1: Message 4974 from client

Message from client on socket 1: 127.0.0.1:63620

Received message on socket 2: Message 4886 from client

Message from client on socket 2: 127.0.0.1:63620

Waiting for clientReceived message on socket 0: Message 4951 from client

Message from client on socket 0: 127.0.0.1:63620

Received message on socket 1: Message 4975 from client

Message from client on socket 1: 127.0.0.1:63620

Received message on socket 2: Message 4889 from client

Message from client on socket 2: 127.0.0.1:63620

Model 4: Signal-driven I/O model

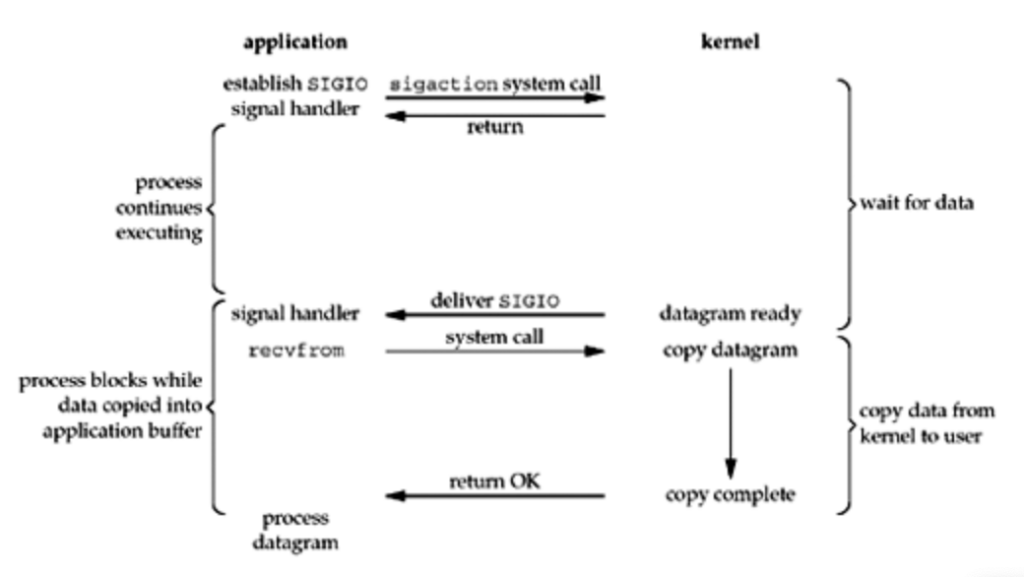

At this point, you’ve learned that blocking, non-blocking, and even multiplexing I/O models still require the main thread to fetch the state of the socket(s) when data is available to read. You might wonder, “Why do I have to poll sockets for their state, which blocks the main thread? Is there a better way to handle this?”

The answer is yes. Instead of polling the state of the sockets, why not let the kernel notify the application when data becomes available on a socket? This approach is called the signal-driven (or event-driven) I/O model: the kernel sends a signal indicating that data is ready to be read.

As shown in the figure above, the process can continue performing other tasks without waiting for data to become available in the sockets. This is a significant improvement, as it allows the main thread to handle other tasks concurrently in the main loop.

To use this I/O model, Linux supports the SIGIO signal, which indicates that I/O is possible on a file descriptor. Note that SIGIO is a signal delivered by the kernel to the process that owns the descriptor, not a system call.

Show me the code

I am going to use the sigaction system call to install a handler for the SIGIO signal that is sent when one of the sockets has data. sigaction lets you specify a custom function (signal handler) that is called when the process receives a specific signal. You can find the manual of the sigaction here

I put the source code of this demo here

| Object | Objective |
|---|---|
| Client | Sends requests to 5 sockets on ports 8081 to 8085 |
| Server | Waits for SIGIO to be sent when one of the sockets has data available to read. Upon receiving the signal, the application uses the recvfrom system call to copy the data from kernel space to application space and prints out the message. |

Server.c

#define MAX_SOCKETS 5

#define BUFFER_SIZE 1024

int sockets[MAX_SOCKETS];

struct sockaddr_in client_addr;

socklen_t client_len = sizeof(client_addr);

// Signal handler for SIGIO

void handle_sigio(int signo) {

char applicationBuffer[BUFFER_SIZE];

for (int i = 0; i < MAX_SOCKETS; i++) {

int received_byte = recvfrom(sockets[i], applicationBuffer, sizeof(applicationBuffer) - 1, 0,

(struct sockaddr *)&client_addr, &client_len);

if (received_byte > 0) {

applicationBuffer[received_byte] = '\0'; // Null-terminate the string

printf("Received message on socket %d: %s\n", i, applicationBuffer);

char client_ip[INET_ADDRSTRLEN];

inet_ntop(AF_INET, &client_addr.sin_addr, client_ip, sizeof(client_ip));

printf("Message from client on socket %d: %s:%d\n", i, client_ip, ntohs(client_addr.sin_port));

} else if (received_byte == -1 && errno != EWOULDBLOCK) {

perror("recvfrom failed");

}

}

}

int read_event_driven_IO() {

struct sockaddr_in server_addr;

// Initialize sockets

for (int i = 0; i < MAX_SOCKETS; i++) {

sockets[i] = socket(AF_INET, SOCK_DGRAM, 0);

if (sockets[i] == -1) {

perror("socket");

return 1;

}

// Define server address for each socket

server_addr.sin_family = AF_INET;

server_addr.sin_addr.s_addr = INADDR_ANY;

server_addr.sin_port = htons(8081 + i);

// Bind the socket to the specified port and IP address

if (bind(sockets[i], (struct sockaddr *)&server_addr, sizeof(server_addr)) == -1) {

perror("bind failed");

close(sockets[i]);

return 1;

}

printf("Socket %d is running on port %d...\n", i, 8081 + i);

// Set the process as the owner of the socket for signals

fcntl(sockets[i], F_SETOWN, getpid());

// Enable non-blocking mode and signal-driven I/O in a single call.

// Two separate F_SETFL calls built from the same flags value would let the second one clear O_NONBLOCK.

int flags = fcntl(sockets[i], F_GETFL, 0);

fcntl(sockets[i], F_SETFL, flags | O_NONBLOCK | O_ASYNC);

}

// Install signal handler for SIGIO

struct sigaction sa;

sa.sa_handler = handle_sigio;

sa.sa_flags = 0;

sigemptyset(&sa.sa_mask);

if (sigaction(SIGIO, &sa, NULL) == -1) {

perror("sigaction");

return 1;

}

while (1) {

printf("Doing something else while waiting for data in sockets\n");

sleep(1);

}

return 0;

}

Client.c

int client_socket;

int message_count = 100; // Number of messages to send

int SERVER_PORT = 8081;

char SERVER_IP[] = "127.0.0.1";

int BUFFER_SIZE = 1024;

int PORT_RANGE = 5;

struct sockaddr_in server_addr;

char buffer[BUFFER_SIZE];

// Create a UDP socket

client_socket = socket(AF_INET, SOCK_DGRAM, 0);

if (client_socket < 0) {

perror("Socket creation failed");

exit(EXIT_FAILURE);

}

// Set up the server address structure

server_addr.sin_family = AF_INET;

if (inet_pton(AF_INET, SERVER_IP, &server_addr.sin_addr) <= 0) {

perror("Invalid server IP address");

close(client_socket);

exit(EXIT_FAILURE);

}

// Send multiple messages to the server

for (int i = 0; i < message_count; i++) {

int random_port = SERVER_PORT + (rand() % PORT_RANGE); // Random port between 8081 and 8085

server_addr.sin_port = htons(random_port);

snprintf(buffer, BUFFER_SIZE, "Message %d from client", i + 1);

ssize_t sent_bytes = sendto(client_socket, buffer, strlen(buffer), 0,

(struct sockaddr *)&server_addr, sizeof(server_addr));

if (sent_bytes < 0) {

perror("sendto failed");

} else {

printf("Sent: %s\n", buffer);

}

}

printf("All messages sent.\n");

// Close the socket

close(client_socket);

return 0;

Result from server

In the result snippet below, you can see that the main thread is not blocked at all while waiting for data to arrive on the sockets, so it is free to do other work instead of sitting idle.

➜ server git:(master) ✗ gcc -o program main.c eventdrivenio.c

➜ server git:(master) ✗ ./program

Socket 0 is running on port 8081...

Socket 1 is running on port 8082...

Socket 2 is running on port 8083...

Socket 3 is running on port 8084...

Socket 4 is running on port 8085...

Doing something else while waiting for data in sockets

Doing something else while waiting for data in sockets

Doing something else while waiting for data in sockets

Doing something else while waiting for data in sockets

Received message on socket 0: Message 5 from client

Message from client on socket 0: 127.0.0.1:57347

Received message on socket 1: Message 29 from client

Message from client on socket 1: 127.0.0.1:57347

Received message on socket 2: Message 1 from client

Message from client on socket 2: 127.0.0.1:57347

Received message on socket 3: Message 3 from client

Message from client on socket 3: 127.0.0.1:57347

Received message on socket 4: Message 2 from client

Message from client on socket 4: 127.0.0.1:57347

Received message on socket 0: Message 11 from client

Model 5: Asynchronous I/O model

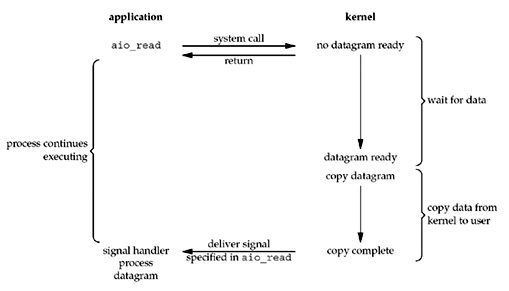

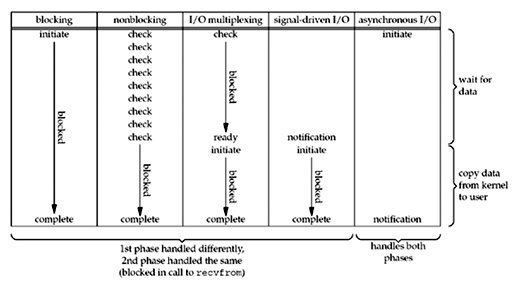

We have 2 phases for I/O operation in each I/O model mentioned in this series, which are:

- Phase 1: Monitoring for availability of File Descriptors (FDs).

- Phase 2: Copying the data from kernel space to application space.

Although the non-blocking and signal-driven I/O models keep the application thread from being blocked during the monitoring phase, the copying phase still blocks in all four models covered so far. Our question is: is there a way to make both phases non-blocking?

The answer is YES. In this model, the kernel copies the data from the socket into the application buffer itself and only then reports completion, so the application is no longer blocked in either phase. In other words, the asynchronous I/O model handles both phases in the kernel.

This is great. The application thread is no longer blocked from checking data availability, and it does not need to pull the data by itself. Both operations are handled by the kernel automatically. Magic!

To implement this model, we can simply call aio_read() function. When the data is copied to the application space by the kernel, the kernel will send a signal to inform the application that the data is ready to be read.

#include <aio.h>

#include <errno.h>

#include <stdio.h>

#include <string.h>

struct aiocb cb;

char buffer[100];

// Zero the control block so that unused fields hold defined values

memset(&cb, 0, sizeof(cb));

cb.aio_fildes = fd;

cb.aio_buf = buffer;

cb.aio_nbytes = sizeof(buffer);

cb.aio_offset = 0;

// aio_read() only queues the request, so this thread is not blocked

if (aio_read(&cb) == -1) {

perror("aio_read");

}

// The reading of the data is still in progress

while (aio_error(&cb) == EINPROGRESS) {

// wait or do other work

doOtherThing();

}

// The data is already in the application buffer; aio_return() reports how many bytes were read

ssize_t bytesRead = aio_return(&cb);

The table below shows the differences between the I/O models mentioned in this series.

The signal-driven and asynchronous I/O models are perfect, right? Not so fast!

The signal-driven and asynchronous I/O models sound good on paper, but everything in software has tradeoffs.

For example, the signal-driven I/O model is hard to write and maintain in real-world software. Multiple signals could fire at the same time for multiple open sockets (file descriptors). In the application layer, you don’t know which socket triggered the signal, which, I argue, makes the code more complex and error-prone.

As for the asynchronous I/O model (AIO), at the time of writing it is not a practical option for sockets or pipes on Linux: glibc implements POSIX AIO calls such as aio_read in user space with helper threads, and the kernel's native AIO interface was designed mainly for disk I/O.

How do modern software systems typically handle file descriptors?

Redis, Nginx, Node.js (libuv), and Go (netpoll package) are well known for implementing the I/O multiplexing model. Yeah, you read that right: the third model mentioned in this series.

Before going into the details of how these programs implement the I/O multiplexing model, I want to introduce a Linux API called epoll as an alternative to poll and select.

The drawbacks of poll() and select()

Let’s consider the usage of poll in the snippet and sequence diagram below again:

#define MAX_SOCKETS 1024

int sockets[MAX_SOCKETS];

// Init socket array

for (int i = 0; i < MAX_SOCKETS; i++) {

    sockets[i] = socket(AF_INET, SOCK_DGRAM, 0);

    // Defining server address for each socket ....

    // Bind each configured socket to a port ...

}

// Set up the poll structure for each socket

// https://man7.org/linux/man-pages/man2/poll.2.html

/*

struct pollfd {

    int fd; file descriptor

    short events; requested events

    short revents; returned events

};

*/

struct pollfd fds[MAX_SOCKETS];

for (int i = 0; i < MAX_SOCKETS; i++) {

    fds[i].fd = sockets[i];

    fds[i].events = POLLIN; // POLLIN means data is available to read.

}

while (1) {

    // This poll() call blocks the current thread until at least one socket is ready

    int ready_count = poll(fds, MAX_SOCKETS, -1);

    // poll() does not say which sockets are ready, so we scan the whole array to find them

    for (int i = 0; i < MAX_SOCKETS; i++) {

        if (fds[i].revents & POLLIN) {

            // Data is ready to be read on socket i

            int received_byte = recvfrom(sockets[i], applicationBuffer, sizeof(applicationBuffer) - 1, 0,

                (struct sockaddr *)&client_addr, &client_len);

            if (received_byte > 0) {

                printf("Message from client on socket %d: %s:%d\n", i, client_ip, ntohs(client_addr.sin_port));

            }

        }

    }

}

From the snippet and sequence diagram above, every poll() call hands the whole file descriptor array to the kernel, and the application then scans the whole array again to find the sockets that have data. Imagine we have 10,000 connections to a server. This means we would have more than 10,000 sockets (file descriptors) open at the same time. Each poll() call has to check all of them, even when only a handful are ready, which reduces system efficiency and leads to performance degradation.

For that reason, the time complexity of each poll() call is O(N), where N is the number of sockets that poll() needs to check.

Handling multiple sockets efficiently with epoll()

What does epoll() do?

From https://man7.org/linux/man-pages/man7/epoll.7.html

The epoll API performs a similar task to poll(2): monitoring multiple file descriptors to see if I/O is possible on any of them. The epoll API can be used either as an edge-triggered or a level-triggered interface and scales well to large numbers of watched file descriptors.

epoll is a Linux-specific API that monitors multiple file descriptors (FDs) simultaneously. Other operating systems offer comparable facilities, such as kqueue on BSD/macOS or I/O completion ports (IOCP) on Windows.

There are 3 critical system calls that manage an epoll instance:

- epoll_create: creates an epoll instance.

- epoll_ctl: registers file descriptors with the epoll instance.

- epoll_wait: waits for I/O events, blocking the calling thread if no events are currently available.

The pseudo-code snippet below illustrates a simple usage of epoll instance on monitoring socket file descriptors:

epollfd = epoll_create1(0)

for each socket to monitor:

create socket

set non-blocking if needed

epoll_ctl(epollfd, ADD, socket_fd, event_type)

loop forever:

events = epoll_wait(epollfd)

for each ready event in events:

if event is on listening socket:

accept new connection

epoll_ctl(epollfd, ADD, new_conn_fd, READ_EVENT)

else:

handle data on event.fd

Below is an example of epoll usage (Source: https://man7.org/linux/man-pages/man7/epoll.7.html):

// Source: https://man7.org/linux/man-pages/man7/epoll.7.html

#define MAX_EVENTS 10

struct epoll_event ev, events[MAX_EVENTS];

int listen_sock, conn_sock, nfds, epollfd;

/* Code to set up listening socket, 'listen_sock',

(socket(), bind(), listen()) omitted. */

epollfd = epoll_create1(0);

if (epollfd == -1) {

perror("epoll_create1");

exit(EXIT_FAILURE);

}

ev.events = EPOLLIN;

ev.data.fd = listen_sock;

if (epoll_ctl(epollfd, EPOLL_CTL_ADD, listen_sock, &ev) == -1) {

perror("epoll_ctl: listen_sock");

exit(EXIT_FAILURE);

}

for (;;) {

nfds = epoll_wait(epollfd, events, MAX_EVENTS, -1);

if (nfds == -1) {

perror("epoll_wait");

exit(EXIT_FAILURE);

}

for (n = 0; n < nfds; ++n) {

if (events[n].data.fd == listen_sock) {

conn_sock = accept(listen_sock,

(struct sockaddr *) &addr, &addrlen);

if (conn_sock == -1) {

perror("accept");

exit(EXIT_FAILURE);

}

setnonblocking(conn_sock);

ev.events = EPOLLIN | EPOLLET;

ev.data.fd = conn_sock;

if (epoll_ctl(epollfd, EPOLL_CTL_ADD, conn_sock,

&ev) == -1) {

perror("epoll_ctl: conn_sock");

exit(EXIT_FAILURE);

}

} else {

do_use_fd(events[n].data.fd);

}

}

}

The benefit of epoll over poll() or select() is that it does not need to rescan every registered socket (FD) on each call. Behind the scenes, the kernel keeps the registered FDs in a red-black tree, so adding or removing one with epoll_ctl costs O(log N). It also maintains a list of ready FDs, so epoll_wait hands back only the FDs that actually have events. In other words, the kernel remembers all the registered sockets and reports a socket to the application only when there is data in it; no signal is involved.

Level-triggered and edge-triggered modes in epoll()

I can immediately point out 2 points that make the difference between these two modes:

Level-triggered mode:

- Application is notified during the data is still available in a socket. The application has to read all data in the socket into its buffer to stop the notifications. Put differently, the

epoll.wait()call always has the data returned due to indefinite notifications received from the level-triggeredepollinstance. - It has the same semantics with

poll()

Edge-trigger mode:

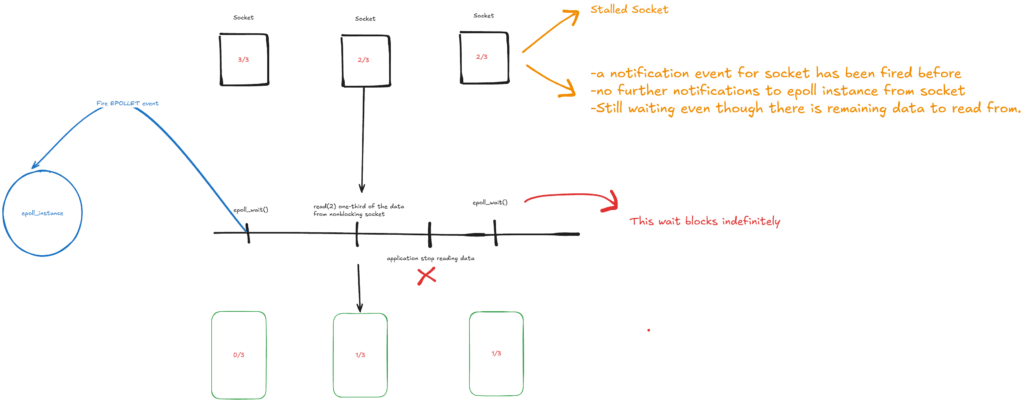

- In contrast,

epollinstance is notified only once when the FD’s state is changed from not ready to ready. The application needs to read or write all data immediately, or it will not be notified again in the future. This means the buffer of FDs needs to be cleared if reading, or fully written if writing. This could introduce bugs as socket data is never read from or written to. As a consequence, the call to epoll.wait() could block infinitely if the application only reads a portion of data.
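The difference between the two modes can be observed on a plain pipe. The sketch below is only an illustration under a few assumptions: `count_wakeups` is a hypothetical helper name, a pipe stands in for a socket, and a 100 ms timeout replaces the usual `-1` so that the edge-triggered case cannot hang. One byte is written and never read, then epoll_wait() is called several times.

```c
#include <sys/epoll.h>
#include <unistd.h>

/* Hypothetical helper: counts how many of `rounds` consecutive
 * epoll_wait() calls report the read end of a pipe as ready while the
 * pending byte is never read. Pass 0 for level-triggered mode or
 * EPOLLET for edge-triggered mode. Returns -1 if the setup fails. */
int count_wakeups(unsigned int extra_flags, int rounds)
{
    int pipefd[2];
    if (pipe(pipefd) == -1)
        return -1;

    int epollfd = epoll_create1(0);
    if (epollfd == -1) {
        close(pipefd[0]);
        close(pipefd[1]);
        return -1;
    }

    struct epoll_event ev = { .events = EPOLLIN | extra_flags };
    ev.data.fd = pipefd[0];

    int wakeups = -1;
    if (epoll_ctl(epollfd, EPOLL_CTL_ADD, pipefd[0], &ev) == 0 &&
        write(pipefd[1], "x", 1) == 1) {
        wakeups = 0;
        for (int i = 0; i < rounds; i++) {
            struct epoll_event out;
            /* 100 ms timeout instead of -1 so the demo always returns. */
            if (epoll_wait(epollfd, &out, 1, 100) == 1)
                wakeups++;
        }
    }

    close(epollfd);
    close(pipefd[0]);
    close(pipefd[1]);
    return wakeups;
}
```

Calling `count_wakeups(0, 3)` should report the FD on every round, while `count_wakeups(EPOLLET, 3)` should report it only on the first one, since the unread byte produces no further state change.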

Edge-triggered epoll() in practice.

epoll() in level-triggered mode behaves similarly to poll() or select(): it keeps notifying the application as long as a file descriptor is ready. It’s easier to write and maintain code using this mode, especially for simple applications. However, if you’re building a high-performance system that needs to handle millions of requests per second, you should consider using epoll() in edge-triggered mode, as I do. This mode is widely used in modern event-driven software because it avoids repeated wake-ups for the same pending data.

epoll() sits at the core of several high-performance, event-driven applications. Some of them, such as NGINX and the Go runtime, register sockets in edge-triggered mode, while others, such as libuv and Redis, keep the default level-triggered mode:

- Node.js (libuv): the cross-platform async I/O library powering Node.js

- NGINX: a high-performance HTTP and reverse proxy server

- Redis: single-threaded in design, using epoll for event handling

- Go (runtime netpoller): for scalable network I/O

I am going to dissect the implementation of epoll() in each repository above in another series. For now, let’s learn how to use epoll() in edge-triggered mode.

To use epoll in edge-triggered mode, simply set the EPOLLET event flag when registering an FD with epoll_ctl():

```c
/* epollfd is the epoll instance returned by epoll_create1();
   conn_sock is the FD to monitor, for example a connected socket. */
ev.events = EPOLLIN | EPOLLET;
ev.data.fd = conn_sock;
if (epoll_ctl(epollfd, EPOLL_CTL_ADD, conn_sock,
              &ev) == -1) {
    perror("epoll_ctl: conn_sock");
    exit(EXIT_FAILURE);
}
```

The snippet above sets two event flags in struct epoll_event:

- EPOLLIN: The associated file is available for read(2) operations.

- EPOLLET: Requests edge-triggered notification for the associated file descriptor.

Then conn_sock is registered with the epoll instance (epollfd), using EPOLL_CTL_ADD as the op argument to add an entry to the interest list of the epoll file descriptor.

Once the registration is done, we run an infinite for loop that waits for file descriptor changes.

```c
#define MAX_EVENTS 10
struct epoll_event ev, events[MAX_EVENTS];
int listen_sock, conn_sock, nfds, epollfd;
int n;                          /* loop index over ready events */
struct sockaddr_storage addr;   /* peer address filled in by accept() */
socklen_t addrlen;

/* Code to set up listening socket, 'listen_sock',
   (socket(), bind(), listen()) omitted. */

epollfd = epoll_create1(0);
if (epollfd == -1) {
    perror("epoll_create1");
    exit(EXIT_FAILURE);
}

/* The listening socket stays level-triggered (no EPOLLET). */
ev.events = EPOLLIN;
ev.data.fd = listen_sock;
if (epoll_ctl(epollfd, EPOLL_CTL_ADD, listen_sock, &ev) == -1) {
    perror("epoll_ctl: listen_sock");
    exit(EXIT_FAILURE);
}

for (;;) {
    /* Block (timeout -1) until at least one registered FD is ready. */
    nfds = epoll_wait(epollfd, events, MAX_EVENTS, -1);
    if (nfds == -1) {
        perror("epoll_wait");
        exit(EXIT_FAILURE);
    }

    for (n = 0; n < nfds; ++n) {
        if (events[n].data.fd == listen_sock) {
            addrlen = sizeof(addr);
            conn_sock = accept(listen_sock,
                               (struct sockaddr *) &addr, &addrlen);
            if (conn_sock == -1) {
                perror("accept");
                exit(EXIT_FAILURE);
            }
            /* Edge-triggered FDs must be non-blocking. */
            setnonblocking(conn_sock);
            ev.events = EPOLLIN | EPOLLET;
            ev.data.fd = conn_sock;
            if (epoll_ctl(epollfd, EPOLL_CTL_ADD, conn_sock,
                          &ev) == -1) {
                perror("epoll_ctl: conn_sock");
                exit(EXIT_FAILURE);
            }
        } else {
            /* Connection socket changed state: consume its data. */
            do_use_fd(events[n].data.fd);
        }
    }
}
```

The for loop waits on multiple sockets (file descriptors) that were registered with the epoll instance beforehand. When any of them changes state, we read its data by calling the do_use_fd(events[n].data.fd) function. Please note that the connection sockets are registered in edge-triggered mode; therefore, we receive the notification only once per state change.

Suppose we read only one-third of the data from a monitored socket. On the next loop iteration, we won’t get another notification for it. As a result, epoll_wait(epollfd, events, MAX_EVENTS, -1) may block indefinitely, because no new event is triggered even though data is still available to read. This leads to a stalled socket whose data is never read completely.
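The stall described above can be reproduced in a few lines. This is a minimal sketch with some assumptions: `unread_bytes_after_stall` is a hypothetical name, a pipe stands in for the socket, `FIONREAD` is used only to count the bytes left behind, and a 100 ms timeout replaces `-1` so the demo returns instead of hanging.

```c
#include <sys/epoll.h>
#include <sys/ioctl.h>
#include <unistd.h>

/* Hypothetical demo: registers a pipe in edge-triggered mode, writes
 * 6 bytes, reads only 2 of them after the first notification, then
 * waits again. Returns the number of bytes still unread when the second
 * epoll_wait() times out without an event, or -1 on any other outcome. */
int unread_bytes_after_stall(void)
{
    int p[2], left = -1;
    char buf[2];
    struct epoll_event ev = { .events = EPOLLIN | EPOLLET }, out;

    if (pipe(p) == -1)
        return -1;
    int epollfd = epoll_create1(0);
    if (epollfd == -1) {
        close(p[0]);
        close(p[1]);
        return -1;
    }
    ev.data.fd = p[0];

    if (epoll_ctl(epollfd, EPOLL_CTL_ADD, p[0], &ev) == 0 &&
        write(p[1], "abcdef", 6) == 6 &&
        epoll_wait(epollfd, &out, 1, 100) == 1 &&   /* the single edge */
        read(p[0], buf, sizeof buf) == 2 &&         /* partial read */
        epoll_wait(epollfd, &out, 1, 100) == 0) {   /* no new edge */
        /* Ask the kernel how many bytes are still waiting in the pipe. */
        if (ioctl(p[0], FIONREAD, &left) == -1)
            left = -1;
    }

    close(epollfd);
    close(p[0]);
    close(p[1]);
    return left;
}
```

With a timeout of `-1`, the second epoll_wait() call would simply never return, even though 4 bytes are still sitting in the pipe.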

You can find the edge-triggered epoll example above here.

How to avoid epoll_wait blocking indefinitely in edge-triggered mode?

The answer to the problem is pretty simple: save the state of the reading/writing progress on the socket, so you can continue from that checkpoint later.

You really don’t want your application to attempt reading all available data from the socket every single time. Moreover, if the client keeps sending the data to the sockets continuously, the application does not know when the transmission is complete.

It is the application layer’s responsibility to implement the mechanism that reads or writes the socket data in chunks across epoll_wait() calls. According to the Linux man page, the suggested way to use epoll as an edge-triggered (EPOLLET) interface is as follows:

(1) with nonblocking file descriptors; and

(2) by waiting for an event only after read(2) or write(2) return EAGAIN.

The sockets registered with the epoll instance are non-blocking, which means a call to either read(2) or write(2) returns immediately instead of waiting.

EAGAIN is the error code that read(2) or write(2) sets in errno on a non-blocking file descriptor. It simply indicates that the operation cannot proceed right now: there is nothing left to read, or there is no room left to write.
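The setnonblocking() helper called in the example is not shown in the excerpt. A common way to write it uses fcntl(2), as sketched below. The names `set_nonblocking` and `empty_read_would_block` are hypothetical, and the second function exists only to show EAGAIN appearing on an empty pipe.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

/* Switches `fd` to non-blocking mode while keeping its other status
 * flags. Returns 0 on success, -1 on error. */
int set_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags == -1)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) == -1 ? -1 : 0;
}

/* Demo: reading from an empty non-blocking pipe fails immediately with
 * EAGAIN instead of blocking. Returns 1 if that is what happened,
 * 0 otherwise, and -1 if the setup fails. */
int empty_read_would_block(void)
{
    int pipefd[2];
    char buf[16];
    int result = -1;

    if (pipe(pipefd) == -1)
        return -1;
    if (set_nonblocking(pipefd[0]) == 0) {
        ssize_t n = read(pipefd[0], buf, sizeof buf);
        result = (n == -1 && (errno == EAGAIN || errno == EWOULDBLOCK)) ? 1 : 0;
    }
    close(pipefd[0]);
    close(pipefd[1]);
    return result;
}
```

Checking both EAGAIN and EWOULDBLOCK is a portability habit; on Linux the two constants have the same value.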

In conclusion, here is how edge-triggered epoll works:

On every notification, the application reads from the socket until read(2) fails with EAGAIN. If the data read so far does not yet form a complete message, the application saves the partial progress in memory. If clients keep pouring data into the socket, we read the new chunks later, when the edge-triggered epoll instance delivers a fresh notification.
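As a rough illustration of the read-until-EAGAIN rule with saved progress, here is one possible shape for the body of a function like do_use_fd(). Everything here is an assumption made for the sketch: the `conn_state` struct, the fixed 4096-byte buffer, the names `drain_fd` and `drain_demo`, and the use of a pipe in place of a socket.

```c
#include <errno.h>
#include <fcntl.h>
#include <stddef.h>
#include <unistd.h>

/* Hypothetical per-connection state: the bytes collected so far. */
struct conn_state {
    char   buf[4096];
    size_t used;
};

/* Reads from the non-blocking `fd` until read() reports EAGAIN and
 * appends the data to st->buf. Returns 1 when the FD is drained (safe
 * to go back to epoll_wait), 0 on end of file, -1 on error or when the
 * buffer is full. */
int drain_fd(int fd, struct conn_state *st)
{
    for (;;) {
        if (st->used == sizeof st->buf)
            return -1;                      /* sketch: no room left */
        ssize_t n = read(fd, st->buf + st->used, sizeof st->buf - st->used);
        if (n > 0) {
            st->used += (size_t)n;          /* remember the progress */
            continue;
        }
        if (n == 0)
            return 0;                       /* peer closed */
        if (errno == EINTR)
            continue;                       /* interrupted, retry */
        if (errno == EAGAIN || errno == EWOULDBLOCK)
            return 1;                       /* drained for now */
        return -1;
    }
}

/* Demo: two bursts arrive, each drained after its own (simulated)
 * notification; the state carries over between them. Returns the number
 * of bytes accumulated, or -1 on failure. */
int drain_demo(void)
{
    int p[2];
    struct conn_state st = { .used = 0 };

    if (pipe(p) == -1)
        return -1;
    int ok = fcntl(p[0], F_SETFL, O_NONBLOCK) == 0
          && write(p[1], "hello", 5) == 5
          && drain_fd(p[0], &st) == 1       /* first notification */
          && write(p[1], " world", 6) == 6
          && drain_fd(p[0], &st) == 1;      /* second notification */
    close(p[0]);
    close(p[1]);
    return ok ? (int)st.used : -1;
}
```

A real server would keep one such state per connection (for example, looked up by FD) and would parse complete messages out of the buffer instead of failing when it fills up.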

This I/O multiplexing model, which uses epoll on Linux, makes the application more efficient and scalable, as it allows data to be divided into manageable chunks and processed sequentially.

References:

https://notes.shichao.io/unp/ch6/#io-models

https://man7.org/linux/man-pages/man7/epoll.7.html

The Linux Programming Interface – Book by Michael Kerrisk

![Sequence diagram: poll() loop between the client, the application space, and the kernel](http://www.plantuml.com/plantuml/img/TL9BQzmm4BxxLmm911kIxRQvX0O7IawbosQXlOMbQELnF4IMZ3JUaFxwpjXUrmkfBhOqh-yncR4S8ZmwGizPPoAJfSvuXejlNUV86gRW8NN6uWMO1F9wdlO3eqStnCRVh2pX9tBVGLbcaWut3rC4law1kahFwzLgU_Dv3s_43QHWNv6VQNyDU1Ga2KhOFMqstxUP3umGwQLX2FKm7c09ZJmn6KT_C87nO68qxvhP1UVg2XB7td8VCKaT1sY3v0jZuzmqDK4jT_8sjEHVe39iLXdw2dIoajb99uriGXWuZM8fBMMdd7oLXZeTC5F9H5ew6a6le9qMlpNa47A1X8N06OYSoVEei1Hvlf3e6fXQ33sFDHy9CfvV3QMnkW2hdGvEm2JW1kSSsyUewCr2GcuYccf8cNdhPIOm6Qjl4zGpJwteBdW77RKJUV4YVygtHN5MCokcIU-_RUW5IExxze1HkPpyC4SZZXv4gbF7D3Qgy2D8WxI3Xx8rQ_L0L9i96yV3lj5-5QVTkhfStzxTdnOC5cVouuJI7kiOsdosjFX_yYu6YsbQWq_GHV9SvrUNTrzkr_V_waJVRFbTC58dzVal)